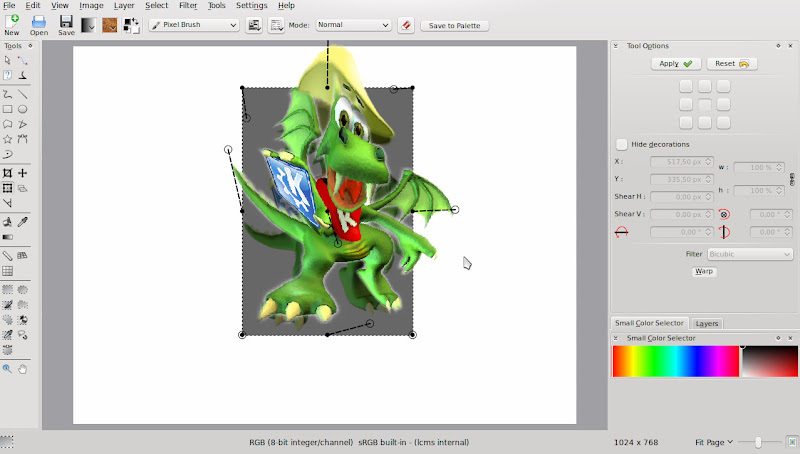

GSoC: At last, image warping !

I spent the past 10 days working on image warping. It wasn't easy, but I think most of the work is done !

Since I had absolutely no idea concerning the algorithm, and wasn't even sure what I wanted to do - I only knew I wanted to add an "image deformation" functionality to the tool -, I began searching randomly on the web. I eventually came across a nice paper entitled Image Deformation Using Moving Least Square. Apart from the snappy pictures at the top of the front page, what I liked was that it gave implementation details and put the emphasis on the speed of this method. Basically, it describes 3 different math functions (affine, similitude, and moving least squares) which distort an image according to the modifications made on a set of points.

I thought I could give it a try and began the implementation of the first one, i.e. affine deformation. It essentially consisted in some operations on 2D vectors and 2x2 matrices. Applying it roughly on a QImage produced something so horrible that I decided to take a closer look at the paper : there was actually a small mistake in the paper (a coefficient disappearing between 2 lines, nothing serious). Here is the kind of things I obtained after fixing the calculation :

I expected something like this : those "artefacts" you can see are actually pixels which have not been given a color. The thing is, the fact that you iterate over every pixel of the source image doesn't mean that you will "light up" every pixel of the destination image. It is actually quite a classic problem. Most of the time, the simplest solution is to iterate over every pixel of the destination image (instead of those of the source), and light them up with the correct color ; that is, provided that you can invert the function ("what pixel (x,y) of the source will go to pixel (x',y') of the destination ?"). Unfortunately, it's not the case here : that is problem #1. Problem #2 is the speed. Applying the transformation function to every point of the source is too slow to compute a preview in real-time : it took about 2 seconds to compute the image.

Turns out both problems could be solved simultaneously if I found a way to fill the blanks left when applying the function on the pixels of the source. In fact, the image can be seen as a set of quads which are transformed into other quads by the function, so filling the blanks boils down to interpolating the inside of these quads. To speed up the process, I could apply the deformation function to only some points of the source (like 1 over 5 or even less), and interpolate the inside of bigger quads, based on the hypothesis that interpolating quads is faster than applying the transformation function. It doesn't really matter if the preview is not 100% accurate (that's the a point of using a preview).

The first step was to be able to do something similar to filling a quad (or more generally a polygon), given its vertices. While Qt can fill a polygon, there is no way to customize the operation applied on each pixel of the polygon (as far as I know). I came to the conclusion I had to write my own method to fill a polygon, and it had to be fast. Fortunately, I wrote something like that this year for a project for my school of engineering (it was in Ada though) - a scanline fill polygon procedure with Sutherland-Hodgman algorithm for clipping -, and I was quite proud of its speed. I translated it in C and tried to fill the quads with the color of their top left vertices (for starters).

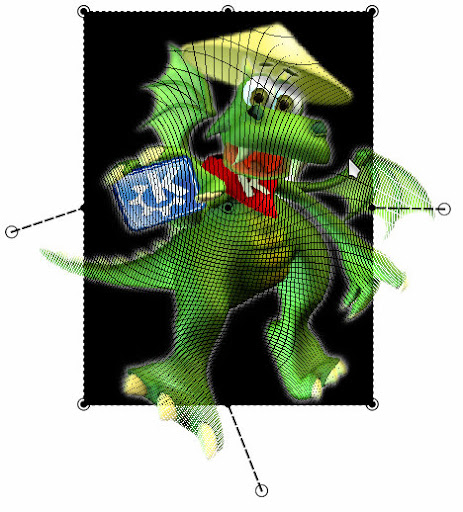

Here is an example before quad filling, with the transformation function applied to 1 pixel over 3 :

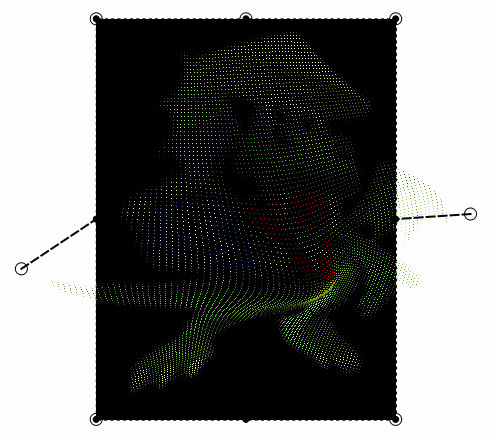

Here is one image I obtained after quad filling :

You can see that even if it's pretty ugly - there is no interpolation yet -, it's working ! The positive aspect is that filling the blanks is indeed faster than applying the transformation function to every pixel. The negative one is that it isn't fast enough : I have to go up to 1 pixel over 10 to have something relatively smooth (and of course it's even more inaccurate and ugly). My function seems however as fast as Qt's (though I didn't benchmark anything).

I hoped that maybe it would be more decent if I applied a bilinear interpolation to fill the quads, based on the color of their 4 vertices. Here is what I got, with only a minor decrease of the speed :

The result was clearly not good enough, so I gave it a little more thinking. Eventually, I realized it wasn't quite logical that I used only the colors of the 4 vertices of a quad to fill it : I mean, there must be a way to establish that pixel (x',y') of the destination quad should be of the same color as pixel (x,y) of the source quad.

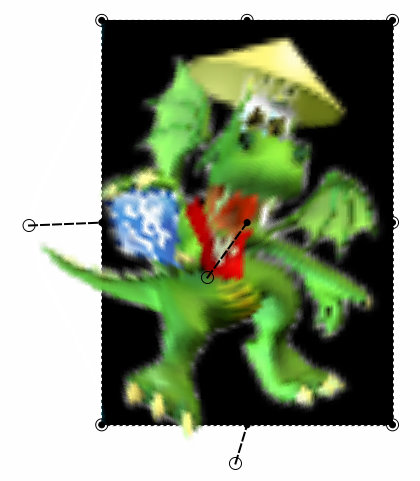

Actually, Qt is able to transform a quad into another quad (using the QTransform class), which means it can associate each point of the destination quad to the corresponding point of the source quad. I was a bit concerned about the speed, as for each quad, I would have to make Qt compute the QTransform object (the object that describes the inverted transformation : from destination to source), and then apply it to every pixel of the destination quad, but it turned out the result was so much better than expected that I could increase the sizes of the quads a LOT and still have an accurate preview. In fact, I can apply the transformation function to only 1 pixel over 20 ! And here is how the preview looks like :

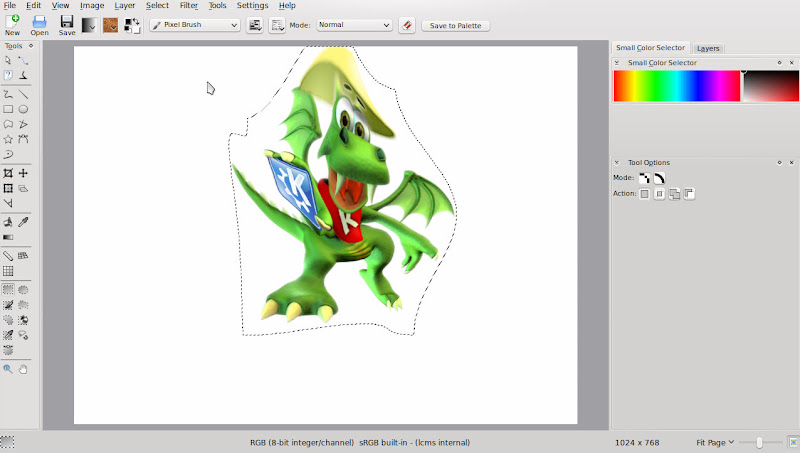

On my computer it IS fast and smooth :). The result you can see on the first screenshot of this post is obtained (after the user clicks "Apply") simply by applying the transformation function to every pixel, and filling the quads with the same method.

Now I still have to improve the UI and add the two remaining transformation functions (I only implemented affine transformation, there is still similitude and moving least squares).